Make AI in space make sense

#HYPERVIEW2 // EASi // ECAI

As we increasingly rely on AI for satellite operations, Earth observation, and mission-critical decisions, the question isn’t just what AI predicts – but why. EASi and HYPERVIEW2 by KP Labs, MI² (Warsaw University of Technology), Poznan University of Technology and ESA Φ-Lab tackle the frontier of Explainable AI (XAI) for space, spotlighting the urgent need for trust, clarity, and accountability in high-stakes environments.

From understanding atmospheric changes to operating spacecraft autonomously, AI decisions impact real missions and real lives. But without transparency, we risk losing trust in these systems—especially when lives, missions, or environmental decisions are on the line.

With HYPERVIEW2, we’re challenging researchers, engineers, and data scientists to explore new ways of making AI interpretable and explainable for satellite data processing, space operations, and Earth monitoring.

Challenge? Workshop? Both!

The new HYPERVIEW2 Challenge is part of the Explainable AI in Space (EASi) workshop, which KP Labs, ESA, and the Φ-Lab Challenges team are organising at the European Conference on Artificial Intelligence (ECAI), hosted in Bologna, Italy, from 25 to 30 October 2025.

Participants are welcome to engage in multiple ways: you can take part in the challenge only, submit a paper to the workshop only, or combine both for a deeper contribution. Submitting a paper is not required to join the challenge, and vice versa—though we strongly encourage participants in the challenge to consider sharing their methods and insights through a workshop submission. This flexible format allows researchers, engineers, and AI practitioners to participate in the way that best suits their expertise and goals. Whether you’re aiming to publish, to compete, or to do both, EASi and HYPERVIEW2 provide a shared platform to advance the frontiers of trustworthy, explainable AI in space applications.

Transparency beyond the stratosphere

As space exploration and Earth observation increasingly rely on artificial intelligence (AI) for mission-critical tasks such as satellite operations, data analysis, and decision-making, ensuring the reliability, interpretability, and accountability of these AI systems becomes paramount. The EASi workshop addresses a critical intersection between Explainable Artificial Intelligence (XAI) and space applications, offering broad relevance to multiple research and industrial communities beyond traditional AI approaches. We promote methods that make AI models more explainable and transparent, particularly in safety-critical and high-stakes environments like space, targeting topics with far-reaching implications across several sectors:

- Aerospace: The safety, reliability & transparency of AI in space applications impact both governmental space agencies and private sector efforts. Ensuring that AI-driven systems can be trusted, explained, and verified in mission-critical environments is crucial to avoiding catastrophic failures & ensuring public trust.

- Earth Observation and Environmental Sciences: With AI systems playing an increasing role in analyzing vast amounts of satellite imagery and data to monitor climate change, disasters, and ecosystem health, explainability and interpretability are essential for accurate, reliable insights. Transparency in AI decisions allows scientists, policymakers, and stakeholders to trust and act on AI-generated insights. Decision Intelligence further enhances this process by integrating AI-driven insights with human expertise, enabling more informed and effective decision-making in addressing environmental challenges.

- AI Research Community: The methods and techniques discussed are applicable beyond space. They offer valuable insights for improving AI transparency in other fields such as finance, healthcare, robotics, and automotive industries, where trust in AI decisions is critical.

The topics of interest at EASi include, but are not limited to:

“Where XAI meets space”

“XAI techniques and tools for (not only) space”

Programme

The full EASi programme can be found here.

Keynote speaker

Riccardo Guidotti, Associate Professor at the University of Pisa

Short bio: Riccardo was born in 1988 in Pitigliano (GR), Italy. He graduated cum laude in Computer Science from the University of Pisa (BS in 2010, MS in 2013) and received his PhD in Computer Science from the same institution with a thesis on Personal Data Analytics. He is currently an Associate Professor (RTD-B) at the Department of Computer Science, University of Pisa, Italy, and a member of the Knowledge Discovery and Data Mining Laboratory (KDDLab), a joint research group with the Information Science and Technology Institute of the National Research Council in Pisa. He won the IBM fellowship program and was an intern at IBM Research Dublin, Ireland, in 2015. His interests are in personal data mining, clustering, explainable models, and the analysis of transactional data.

Roberto Del Prete, Internal Research Fellow at the ESA Φ-lab

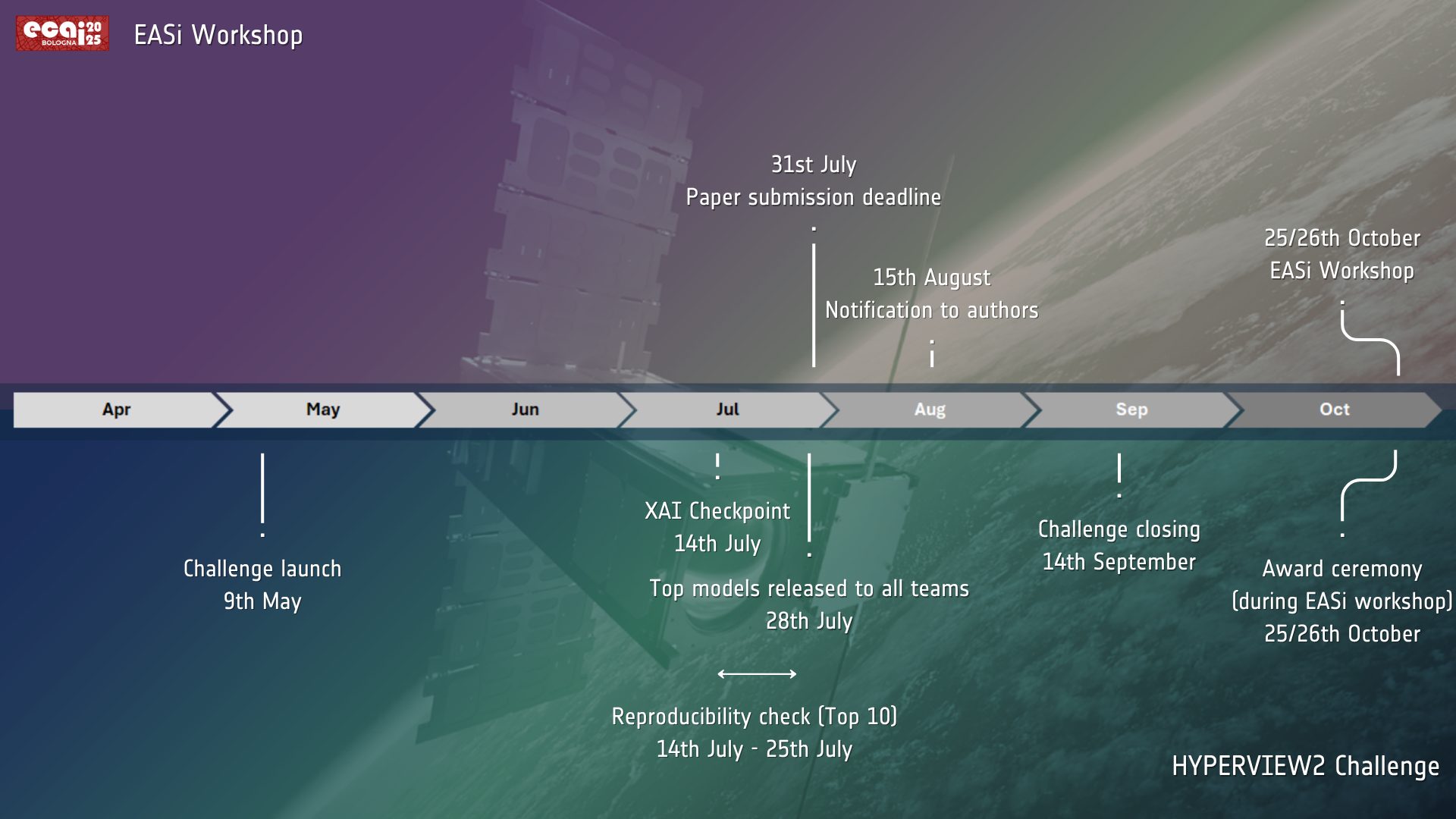

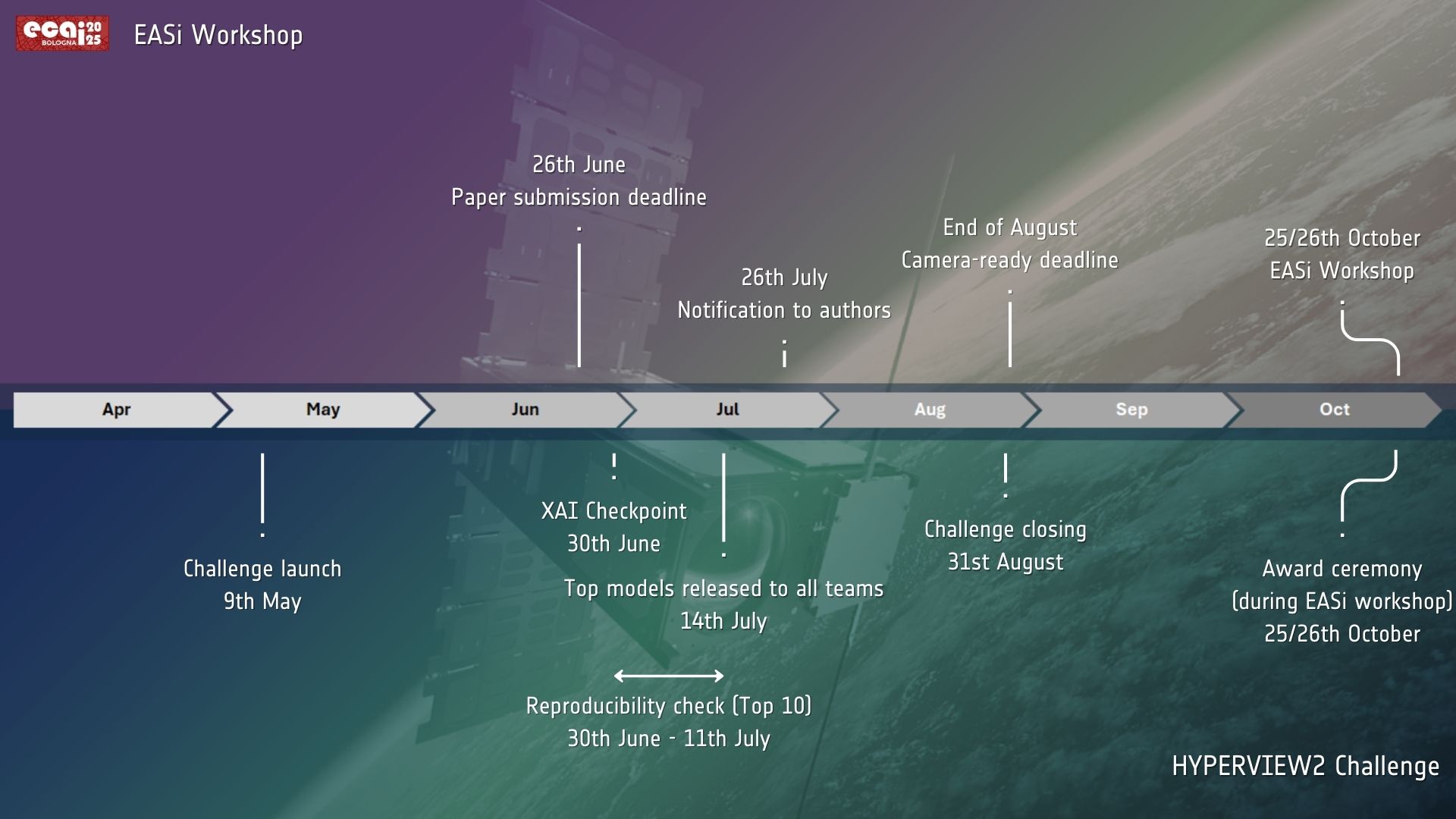

Important dates

Please note that separate deadlines apply to the EASi workshop and to the HYPERVIEW2 Challenge. All dates and times refer to Central European Summer Time (CEST).

EASi paper submission time and deadlines

HYPERVIEW2 Challenge time and deadlines

1 Checkpoint to select the top 5 models for XAI.

2 Verification of the reproducibility of the top 10 models. The 10 best-performing teams will be asked to submit the Jupyter notebooks containing their models.

Submission instructions & proceedings

Papers must be written in English, be prepared for double-blind review using the EASi LaTeX/Word template, and not exceed 14 pages (plus at most 2 extra pages for references).

The LaTeX template: LaTeX2e Proceedings Templates download

The Word template: Microsoft Word Proceedings Templates

The submission website is: https://easychair.org/conferences?conf=easi2025

The accepted papers presented at EASi will be published in the Communications in Computer and Information Science (CCIS) series by Springer.

Prizes for the HYPERVIEW2 Challenge

Your work will be rewarded. Besides boosting your reputation, gaining the recognition of an international AI and EO expert community, and of course honing your AI and EO skills, the three top-scoring teams will receive a hands-on prize from KP Labs and the ESA Φ-lab:

- 1st Place: 2000 EUR + 1-month access to Leopard DPU by KP Labs through Smart Mission Lab to benchmark the models on flight hardware with KP Labs’ support (+ an option to widely promote the benchmarking results through KP Labs’ social media) + diploma

- 2nd Place: 1000 EUR + 1-month access to Leopard DPU by KP Labs through Smart Mission Lab to benchmark the models on flight hardware + diploma

- 3rd Place: 500 EUR + 1-month access to Leopard DPU by KP Labs through Smart Mission Lab to benchmark the models on flight hardware + diploma

So, what are you waiting for?

🔗 Intrigued? Follow the link, find your team, and join the challenge today.

Meet the team

Want a sneak peek behind the scenes? Meet the team of international AI and Earth Observation data science experts who have worked tirelessly to bring this exciting challenge to life.

Organizers and Chairs

- Przemysław Biecek, Warsaw University of Technology, MI².AI, Poland

- Marek Kraft, Poznan University of Technology, Poland

- Nicolas Longépé, Φ-Lab, European Space Agency, Italy

- Jakub Nalepa, Silesian University of Technology, KP Labs, Poland

- Evridiki Ntagiou, European Space Operations Centre, European Space Agency, Germany

- Lukasz Tulczyjew

- Agata M. Wijata, Silesian University of Technology, KP Labs, Poland

Technical and Scientific Co-Chairs

- Przemyslaw Aszkowski, Poznan University of Technology, Poland

- Hubert Baniecki, University of Warsaw, Poland

- Gabriele Cavallaro, University of Iceland, Forschungszentrum Jülich, Germany

- Mihai Datcu, University Politehnica Bucharest, Romania

- Nataliia Kussul, University of Maryland, United States of America

- Tymoteusz Kwieciński, Warsaw University of Technology, Poland

- Ribana Roscher, Forschungszentrum Jülich, University of Bonn, Germany

- Bogdan Ruszczak, Opole University of Technology, Poland

- Vladimir Zaigrajew, MI².AI, Poland

Scientific Committee

Why should you join?

Drive clarity in the cosmos

Redefine what explainability means for real-world, mission-critical AI systems in space missions.

Merge ethics and engineering

Design AI that works – and makes sense to humans.

Build trust from the ground up

Shape the future of responsible AI in space, Earth observation and beyond.